WHO?

MATLAB | Computer Vision | 3 weeks | 2020

ABOUT

WHO? is a face recognition application that identifies 48 individuals in my Computer Vision course. It even includes a filter that censors people’s faces by sprouting flowers over their eyes. The following face recognition models are implemented in MATLAB:

- SURF-SVM feature classifier

- HOG-SVM feature classifier

- CNN classifier

PROCESS

The diagram on the left shows the procedure for building a face recognition classifier, using the HOG-SVM as the example.

[1] Face Database

The face database includes a series of photos of my classmates, each holding a piece of paper with an identification number. To organize the photos by identity, I developed a helper function that uses ocr to read the identification number automatically and sort each image into the folder for that person. The Viola-Jones object detector is then used to detect the face, which is cropped from the photo.
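The step above could be sketched roughly as follows. This is only an illustration, not the project's helper: the function name readIdAndCropFace, the digit-only OCR setting, and the "largest box is the face" rule are all assumptions.

```matlab
function [id, face] = readIdAndCropFace(img)
% Hypothetical helper: read the ID number on the sheet and crop the face.
% Returns empty outputs when no digits or no face are found.

    % Restrict OCR to digits so the sheet is read as a number.
    result = ocr(img, 'CharacterSet', '0123456789');
    id = regexp(result.Text, '\d+', 'match', 'once');

    % Viola-Jones detector; the default model finds frontal faces.
    detector = vision.CascadeObjectDetector();
    bboxes = detector(img);            % M-by-4 rows of [x y width height]

    face = [];
    if ~isempty(bboxes)
        % Assumption: the largest box is the subject's face.
        [~, k] = max(bboxes(:,3) .* bboxes(:,4));
        face = imcrop(img, bboxes(k,:));
    end
end
```

A caller could then write face into a folder named after id, for example fullfile('faces', id), to get one folder per person.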

[2] Feature Extraction

After the face database is generated, features need to be extracted from each detected face. For the HOG-SVM classifier, for instance, a vector of HOG features is extracted using extractHOGFeatures.
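A minimal sketch of the extraction step, using a random image as a stand-in for a cropped face. The 128-by-128 face size is an assumption chosen for illustration; the project's actual size is not stated.

```matlab
% Synthetic stand-in for a cropped face; a real pipeline would use the
% crop produced by the face detector here.
face = uint8(255 * rand(150, 120));

% Resize every face to a common size so all feature vectors have the
% same length.
faceSize = [128 128];                  % assumed size, not from the project
face = imresize(face, faceSize);

% Default settings: 8x8 pixel cells, 2x2 cell blocks, 9 orientation bins.
features = extractHOGFeatures(face);
disp(size(features))                   % 1 x 8100 for a 128x128 image
```

With the default settings a 128-by-128 image gives 15 x 15 overlapping blocks of 36 values each, hence 8100 features per face.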

[3] Modelling

After feature extraction, the SVM classifier is trained using the MATLAB function fitcecoc, which combines K(K-1)/2 binary SVM models, one for each pair of the K classes, into a multiclass error-correcting output codes (ECOC) model.
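The learner count can be checked on a small example. The sketch below trains on synthetic data with four classes rather than real HOG features; the linear kernel is an assumption, since the project's SVM settings are not given.

```matlab
rng(0);                                % reproducible synthetic data
K = 4;                                 % number of classes (48 in the project)
X = [];
Y = [];
for c = 1:K
    X = [X; randn(20, 10) + 3*c];      % 20 samples of 10 features per class
    Y = [Y; repmat(c, 20, 1)];
end

% Linear SVM binary learners; fitcecoc defaults to one-versus-one coding.
template = templateSVM('KernelFunction', 'linear');
model = fitcecoc(X, Y, 'Learners', template);

numLearners = numel(model.BinaryLearners);
fprintf('%d binary learners, expected %d\n', numLearners, K*(K-1)/2);

% Classify one sample with the trained model.
label = predict(model, X(1, :));
```

By the same formula, a one-versus-one model over 48 identities would hold 48*47/2 = 1128 binary learners.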

[4] Testing

The classifier is then tested on an unknown image. The image is first processed by detecting the face with the Viola-Jones object detector and then extracting HOG features. The face is then classified using the trained HOG-SVM classifier and annotated accordingly. In addition to the HOG-SVM classifier, a SURF-SVM model and a CNN model have also been trained. These models have been incorporated into a single function called RecognizeFace, as seen on the right.

[P] = RecognizeFace( InputImage, FeatureType, ClassifierType, CreativeMode)

- P = (N x 3) matrix where N is the number of people detected and the three columns represent: student ID, center of face x, and center of face y.

- InputImage = input image

- FeatureType = e.g. HOG, SURF

- ClassifierType = e.g. SVM, CNN

- CreativeMode = toggles face filter on/off
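To make the output format concrete, the sketch below takes a made-up P matrix in the documented layout and draws the IDs onto a blank image. It does not call RecognizeFace itself; the values in P and the drawing style are assumptions for illustration only.

```matlab
% Made-up output in the documented format: [student ID, center x, center y].
P = [12 80 60; 35 220 75];
img = zeros(150, 300, 3, 'uint8');     % blank stand-in for InputImage

% One text label per detected person, centered on the face position.
labels = arrayfun(@(id) sprintf('ID %d', id), P(:,1), 'UniformOutput', false);
annotated = insertText(img, P(:,2:3), labels, 'AnchorPoint', 'Center');

% Mark each face center as well.
annotated = insertMarker(annotated, P(:,2:3), 'x', 'Color', 'yellow');
```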

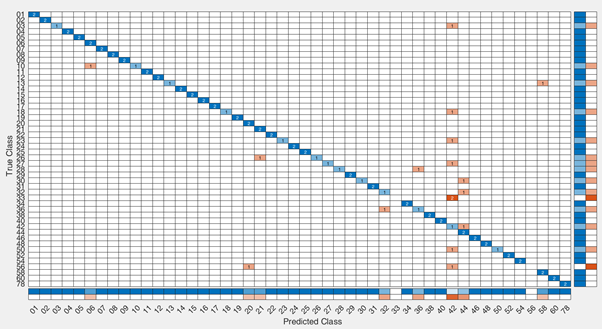

[5] Evaluation

The classifier is evaluated by generating a confusion matrix of the predicted labels against the known labels. The confusion chart on the right shows the result for a test set of previously unseen images of individual classmates.
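A small sketch of this evaluation, using made-up labels rather than results from the project:

```matlab
% Made-up labels for illustration; not results from the project.
trueLabels = [1 1 2 2 3 3 3];
predLabels = [1 2 2 2 3 3 1];

% Rows are true classes, columns are predicted classes.
C = confusionmat(trueLabels, predLabels);

% Overall accuracy is the share of samples on the diagonal.
accuracy = sum(diag(C)) / sum(C(:));

% The same counts drawn as a chart.
confusionchart(trueLabels, predLabels);
```

Here five of the seven samples fall on the diagonal, so the accuracy is 5/7. The off-diagonal cells show which identities are confused with each other.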

FACE FILTER

[6] Image Augmentation

An eye detector is built from the Viola-Jones left and right eye detectors. To ensure that the same eye is not detected twice for a face, I applied bboxOverlapRatio to filter out overlapping detections. To superimpose the flowers onto the detected eyes, I replaced the pixels of the input image with those of the resized flower image.
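The two ideas above can be sketched on synthetic data. In a real run the boxes would come from vision.CascadeObjectDetector with the 'LeftEye' and 'RightEye' models; here the boxes, the 0.3 overlap threshold, and the plain white "flower" are all assumptions.

```matlab
% Made-up detections from the two eye detectors, as [x y width height].
leftBoxes  = [40 50 30 20; 110 52 30 20];
rightBoxes = [112 50 30 20];              % overlaps the second left box

% Drop any right-eye box that overlaps a box already kept.
overlap = bboxOverlapRatio(rightBoxes, leftBoxes);   % 1-by-2 matrix here
keep = all(overlap < 0.3, 2);             % 0.3 is an assumed threshold
eyes = [leftBoxes; rightBoxes(keep, :)];

% Paste a resized flower over each eye by direct pixel replacement.
% The boxes are assumed to lie fully inside the image.
img = zeros(150, 200, 3, 'uint8');        % stand-in for the input image
flower = 255 * ones(64, 64, 3, 'uint8');  % stand-in for the flower image
for k = 1:size(eyes, 1)
    b = eyes(k, :);
    patch = imresize(flower, [b(4) b(3)]);   % [rows cols] = [height width]
    img(b(2):b(2)+b(4)-1, b(1):b(1)+b(3)-1, :) = patch;
end
```

Direct replacement gives a hard rectangular edge around each flower. Blending with a transparency mask would be one alternative, though the source describes plain pixel replacement.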

Sources

https://uk.mathworks.com

https://www.freepik.com/free-photos-vectors/flower